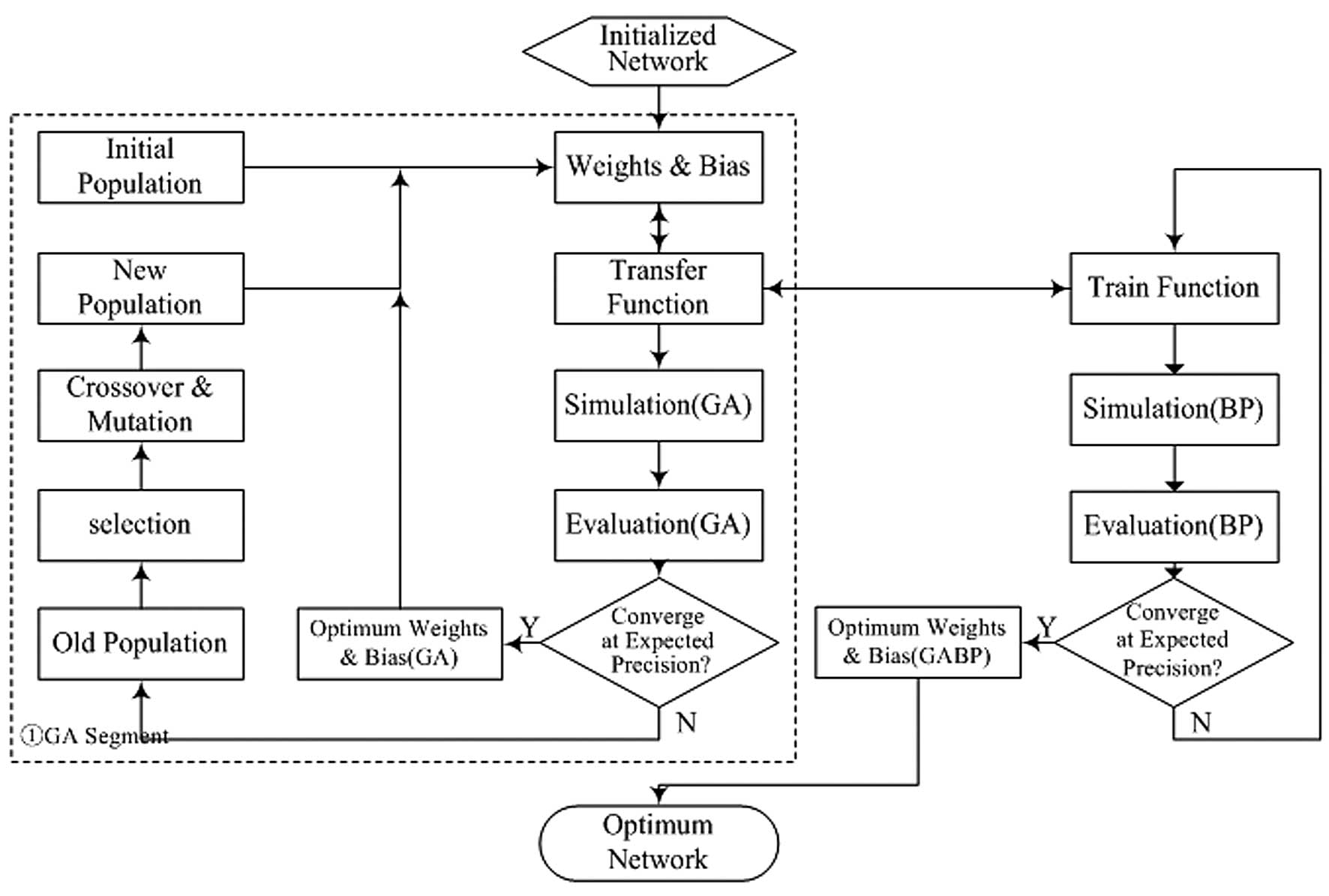

Flowchart For Back Propagation Algorithm

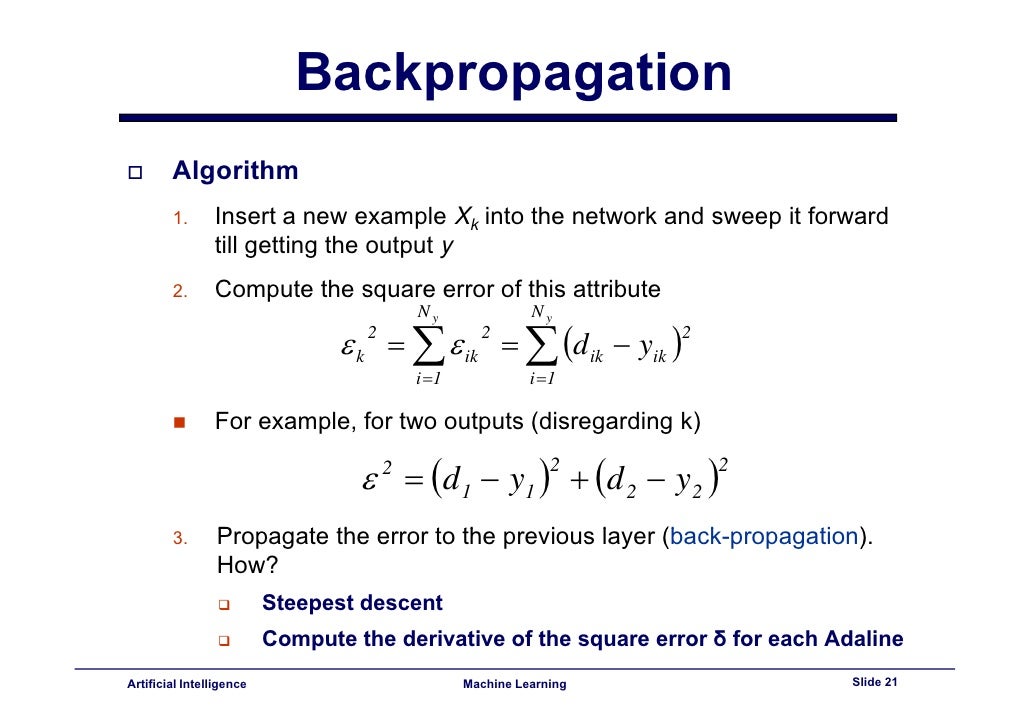

2.4.4 Backpropagation learning algorithm. The backpropagation algorithm trains a given feed-forward multilayer neural network for a given set of input patterns with known classifications.

Finally, the output is produced at the output layer. There is no shortage of papers online that attempt to explain how backpropagation works, but few include an example with actual numbers. This post is my attempt to explain how it works with a concrete example that folks can compare their own calculations to, in order to ensure they understand backpropagation. Backpropagation iteratively learns a set of weights for predicting the class label of tuples.

The obtained results indicate that the ANN algorithm coupled with a back propagation neural network is an efficient and accurate method for predicting surface roughness in turning. Backpropagation is a common method for training a neural network. It has been one of the most studied and used algorithms for neural network learning ever since. When each entry of the sample set is presented to the network, the network examines its output response to the sample input pattern.

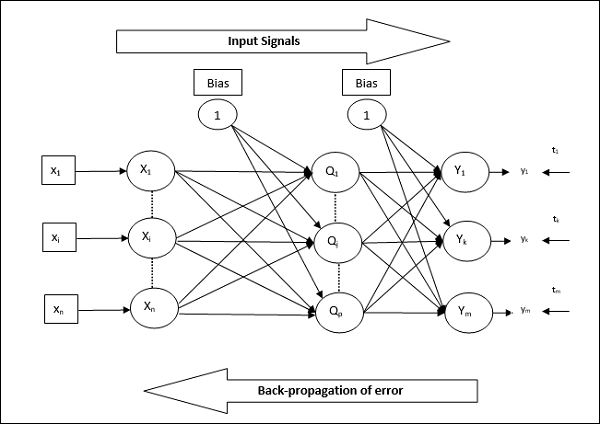

Back propagation in neural networks. We can define the backpropagation algorithm as an algorithm that trains a given feed-forward neural network for a given input pattern whose classification is known to us. Let's pick layer 2 and its parameters as an example. For us, n = 2 and m = 4.

It operates on an error-correction learning law. The same operations can be applied to any layer in the network. A multilayer feed-forward neural network consists of an input layer, one or more hidden layers, and an output layer. An example of a multilayer feed-forward network is shown in Figure 9.2. Back propagation requires a known desired output for each input value in order to calculate the gradient of the loss function.
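To make the need for a known desired output concrete, here is one common formulation. The text does not name a specific loss, so the squared error below is an assumption, as are the symbols: $t_k$ is the desired output of output neuron $k$, $y_k$ is the output the network actually produces, $w$ is any weight, and $\eta$ is a learning rate.

$$
E = \frac{1}{2}\sum_{k}\left(t_k - y_k\right)^2,
\qquad
\frac{\partial E}{\partial y_k} = y_k - t_k,
\qquad
w \leftarrow w - \eta\,\frac{\partial E}{\partial w}
$$

Under this choice, the derivative of the loss with respect to each output contains $t_k$ directly, so the gradient cannot be evaluated for an input whose desired output is unknown.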

The backpropagation algorithm performs learning on a multilayer feed-forward neural network. When each entry of the example set is presented to the network, the network examines its output response to that input pattern. The principle behind the backpropagation algorithm is to reduce the error that results from randomly initialized weights and biases, so that the network produces the correct output. This numerical method was used by different research communities in different contexts, and was discovered and rediscovered, until in 1985 it found its way into connectionist AI, mainly through the work of the PDP group [382].
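The idea of starting from random weights and repeatedly reducing the error can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation. The network size (2 inputs, 2 hidden neurons, 1 output), the sigmoid activation, the squared-error loss, the learning rate, and the input and target values are all assumptions chosen for the example.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, b1, W2, b2):
    """Forward pass: input -> hidden activations h -> output activations y."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = [sigmoid(sum(w * hi for w, hi in zip(row, h)) + b) for row, b in zip(W2, b2)]
    return h, y

def train_step(x, target, W1, b1, W2, b2, lr=0.5):
    """One backpropagation step on a single pattern.

    Updates the weights in place and returns the loss measured
    before the update, for E = 0.5 * sum((y - t)^2).
    """
    h, y = forward(x, W1, b1, W2, b2)
    # Output-layer error terms: dE/dz for each output neuron.
    delta_out = [(yk - tk) * yk * (1 - yk) for yk, tk in zip(y, target)]
    # Hidden-layer error terms: propagate the output errors back through W2
    # (computed before W2 is modified).
    delta_hid = [hj * (1 - hj) * sum(delta_out[k] * W2[k][j] for k in range(len(W2)))
                 for j, hj in enumerate(h)]
    # Gradient-descent updates for both layers.
    for k, row in enumerate(W2):
        for j in range(len(row)):
            row[j] -= lr * delta_out[k] * h[j]
        b2[k] -= lr * delta_out[k]
    for j, row in enumerate(W1):
        for i in range(len(row)):
            row[i] -= lr * delta_hid[j] * x[i]
        b1[j] -= lr * delta_hid[j]
    return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, target))

# Randomly initialized weights (seeded so the run is repeatable).
random.seed(0)
W1 = [[random.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(2)]
b1 = [0.0, 0.0]
W2 = [[random.uniform(-0.5, 0.5) for _ in range(2)]]
b2 = [0.0]

# Hypothetical training pattern and its known desired output.
x, target = [0.05, 0.10], [0.99]
losses = [train_step(x, target, W1, b1, W2, b2) for _ in range(200)]
print(losses[0], losses[-1])
```

Printing the first and last entries of `losses` shows the error shrinking as the weights move away from their random starting values. A real training run would loop over many patterns rather than one.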

W is a weight matrix of shape n × m, where n is the number of output neurons (neurons in the next layer) and m is the number of input neurons (neurons in the previous layer).
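The shape convention can be checked with a short sketch of one layer's forward computation, using n = 2 and m = 4 as in the example above. The sigmoid activation, the bias vector `b`, and every numeric value are assumptions made for illustration. Plain Python lists are used so the snippet has no dependencies.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(W, b, x):
    """Compute a = sigmoid(W x + b) for a single layer.

    W has n rows (one per output neuron) and m columns (one per input neuron).
    """
    n, m = len(W), len(W[0])
    assert len(x) == m and len(b) == n
    z = [sum(W[i][j] * x[j] for j in range(m)) + b[i] for i in range(n)]
    return [sigmoid(zi) for zi in z]

# Hypothetical values: n = 2 output neurons, m = 4 input neurons.
W = [[0.1, -0.2, 0.3, 0.0],
     [0.5, 0.4, -0.1, 0.2]]
b = [0.0, 0.0]
x = [1.0, 0.0, 1.0, 0.0]

a = layer_forward(W, b, x)
print(len(a))  # one activation per output neuron
```

Each row of `W` holds the weights feeding one neuron in the next layer, so multiplying by an input of length m always yields n activations.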